What is a Deepfake?

by Oliver Goodwin | May 3, 2022

Does the possibility of actors appearing on set despite gaps in time or space ever bewilder you? For instance, when Paul Walker died in 2013, he left behind unfinished scenes. Yet when Fast & Furious 7 was released in 2015, those scenes had been “miraculously” completed.

Are you now wondering how this “magic”, which defies time and physical distance, was conjured? It shouldn’t come as a surprise: technology keeps producing newer, faster, and more capable tools for all kinds of ends, and deepfake technology, the subject of this article, is one of them.

What is Deepfake Technology?

To explain deepfake technology, we must begin with the basics: synthetic media.

When artificial intelligence (AI) is used to generate, manipulate, or modify media such as videos, audio recordings, and images, the result is synthetic media. Synthetic media has several subsets, each defined by its use and impact, and deepfake technology is one of them.

“Deepfake” is a portmanteau of “deep”, from deep learning, a branch of artificial intelligence, and “fake”. The technology uses AI to create falsified but realistic, or near-realistic, media content such as video and audio files.

To better understand this concept, consider two fictitious men, Harry and Nick. You want a video of Harry singing England’s national anthem, but Harry is unavailable. Deepfake technology makes this possible: an AI model learns Harry’s face from assorted videos of him and superimposes it onto a video of Nick, who is available, singing the anthem. The result is a falsified video in which Harry appears to sing the national anthem.

In essence, deepfake technology is the branch of synthetic media used to deceive or mislead by fabricating visuals or audio of events that never happened. This negative connotation dates back to the origin of the name itself: in 2017, a Reddit user calling himself “deepfakes” claimed he had built a machine-learning algorithm that could superimpose celebrities’ faces onto any porn clip of his choice.

How Does Deepfake Technology Work?

To make deepfakes, one needs at least three essential components:

- the target media,

- the artificial neural networks,

- and the source media.

The target media is that which one intends to manipulate. For example, in the case of a video file, the target video is one upon which the transposition occurs.

The source media, on the other hand, is what the AI algorithm studies so that it can transpose it convincingly onto the target media. In the example above, the target is the existing footage of Nick singing the national anthem: he is the performer whose voice and movements carry Harry’s face.

The source, in turn, is a medley of many clips of Harry performing random actions, filmed from different angles, wearing different expressions, and lit in different ways. The AI algorithm studies these clips to learn nearly every aspect of Harry’s appearance so that it can stitch those features convincingly onto Nick’s corresponding ones.

We discuss the third essential component, artificial neural networks, in more detail below.

What Are Neural Networks?

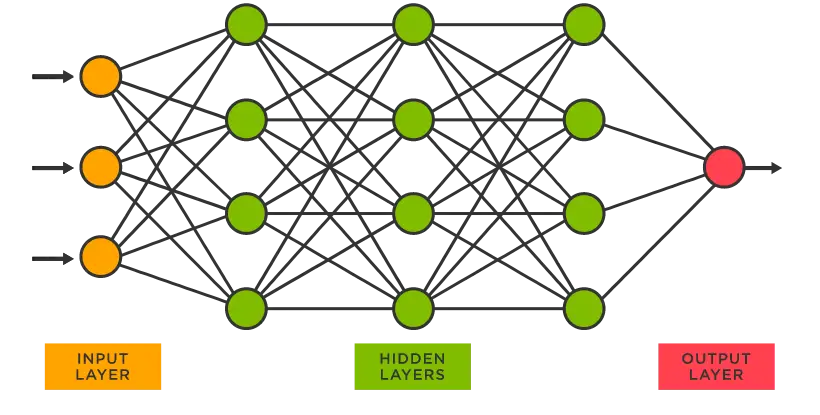

Artificial neural networks (ANNs), or simply neural networks (NNs), are the building blocks of deep learning, a branch of machine learning and therefore of AI. They are systems of hardware and/or software units modeled loosely on the way neurons in the human brain work.

ANNs have been put to many commercial uses, mostly to solve complex signal-processing and pattern-recognition problems. These include, but are not limited to, face recognition in security systems, handwriting recognition for processing checks, AI voice generators, and weather prediction.

How Do Artificial Neural Networks Contribute to the Creation of Deepfakes?

ANNs function somewhat like the human nervous system, which is unsurprising since they are patterned after it. An ANN consists of many simple processors, or nodes, that operate in parallel and are arranged in tiers, or layers.

The first tier, which can be likened to the optic nerve, receives the raw data (for example, the image information a retina would capture) and passes its output on to the next tier, much as neurons along the optic pathway pass signals on to their neighbours.
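The tiered flow described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not how any particular deepfake tool is built: every node in a tier takes a weighted sum of the previous tier's outputs and squashes it with a sigmoid function, and each tier's output becomes the next tier's input. The weights below are made-up numbers; in a real network they are learned during training.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """One tier: each node weighs every input from the previous tier."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Pass raw data through each tier in turn; each output feeds the next tier."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical toy network: 3 raw inputs (e.g. pixel brightness values),
# a hidden tier of 2 nodes, and a single output node.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden tier
    ([[1.0, -1.0]], [0.0]),                               # output tier
]
output = network_forward([0.9, 0.1, 0.4], layers)
print(output)
```

Stacking more tiers is just a longer `layers` list; "deep" learning refers to networks with many such tiers.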

These networks are then trained to recognise the things we care about. For instance, to train an ANN on the appearance of Chris Evans, we would first feed it a series of whole and partial images of the actor taken from multiple angles, telling the system what each image shows and what the output should be.

The algorithm is programmed with specific rules for how each piece of information is handled. And because ANNs are adaptive, they adjust themselves during training, strengthening their knowledge based on the data fed into them. If, while training the system on images of Chris Evans, we labelled certain regions “Chris Evans’ lips” and everything else “not Chris Evans’ lips”, it would learn to apply those labels, changing course only if later training examples repeatedly indicated otherwise.
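The labelling-and-adjusting process can be illustrated with a single artificial neuron (logistic regression) trained by gradient descent. This is a deliberately tiny, hypothetical sketch: the two input features, "redness" and vertical position in the face, and all example values are invented for illustration, whereas a real network learns its own features from raw pixels. Each pass nudges the weights in the direction that reduces the error on the labelled examples, which is the self-adjusting behaviour described above.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Probability that a region is "Chris Evans' lips"."""
    return sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)


def train(examples, epochs=1000, lr=0.5):
    """Adjust weights after every labelled example to reduce the log-loss."""
    weights, bias = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        for features, label in examples:
            # Positive error: the prediction leans too far towards "lips".
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias


# Hypothetical labelled regions: ([redness, height in face from 0=top to 1=chin], label)
examples = [
    ([0.90, 0.80], 1), ([0.80, 0.75], 1), ([0.85, 0.85], 1),  # "Chris Evans' lips"
    ([0.20, 0.30], 0), ([0.30, 0.50], 0), ([0.60, 0.20], 0),  # "not Chris Evans' lips"
]
weights, bias = train(examples)
print(predict(weights, bias, [0.88, 0.78]))  # a new, lip-like region
```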

With this understanding, we can circle back to Harry and Nick as source and target, respectively. The deepfake of Harry singing the national anthem can be built using either or both of two machine learning architectures: autoencoders and generative adversarial networks (GANs).

1. Autoencoders

Autoencoders are a kind of neural network that learns to compress inputs into smaller representations, called encodings, and then regenerate the original inputs from those encodings. A practical parallel is a student who understands their homework rather than simply memorising the solution. Consider two students given the same homework. Student A memorises the solution without grasping how to solve the problem. Student B takes time to study it, breaks it down into simpler parts, and puts the pieces back together without relying on memory, thereby mastering it. If both were given similar questions in a test with slightly altered parameters, Student B would likely ace it, while Student A would be more likely to fail.

Autoencoders work like Student B. Rather than merely repeating the media fed into them, they are trained to capture each detail well enough that the decoded details can be stitched onto a different target.
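The compress-then-rebuild idea can be sketched with a tiny linear autoencoder in plain Python. This is a toy under stated assumptions, not a face model: each hypothetical "image" is just four numbers that all follow one underlying pattern, so a single-number code is enough to rebuild them. The encoder squeezes each input into that code, the decoder expands it again, and training adjusts both to minimise the squared reconstruction error. Real deepfake autoencoders are deep convolutional networks trained on thousands of face images.

```python
import random


def encode(W_e, x):
    """Compress input vector x into a shorter code (the bottleneck)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W_e]


def decode(W_d, z):
    """Rebuild a full-length vector from the code z."""
    return [sum(w * zk for w, zk in zip(row, z)) for row in W_d]


def train_autoencoder(data, code_size, epochs=500, lr=0.05, seed=0):
    """Fit encoder/decoder weights by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    d = len(data[0])
    W_e = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(code_size)]
    W_d = [[rng.uniform(-0.5, 0.5) for _ in range(code_size)] for _ in range(d)]
    for _ in range(epochs):
        for x in data:
            z = encode(W_e, x)
            r = [xh - xi for xh, xi in zip(decode(W_d, z), x)]  # reconstruction error
            # Backpropagate the loss sum(r_i ** 2): gradient w.r.t. the code first,
            # computed before the decoder weights change.
            grad_z = [2 * sum(W_d[i][k] * r[i] for i in range(d)) for k in range(code_size)]
            for i in range(d):
                for k in range(code_size):
                    W_d[i][k] -= lr * 2 * r[i] * z[k]
            for k in range(code_size):
                for j in range(d):
                    W_e[k][j] -= lr * grad_z[k] * x[j]
    return W_e, W_d


def mean_squared_error(W_e, W_d, data):
    total = 0.0
    for x in data:
        x_hat = decode(W_d, encode(W_e, x))
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x)) / len(x)
    return total / len(data)


# Hypothetical data: 21 four-number "images", each a scaled copy of one pattern.
pattern = [0.4, 0.8, -0.4, 0.2]
data = [[t / 10 * p for p in pattern] for t in range(-10, 11)]

W_e, W_d = train_autoencoder(data, code_size=1)
print("training error:", mean_squared_error(W_e, W_d, data))

unseen = [0.35 * p for p in pattern]
print("unseen input:  ", unseen)
print("reconstruction:", decode(W_d, encode(W_e, unseen)))
```

Because the unseen input follows the same pattern as the training data, the autoencoder rebuilds it without ever having seen it, much like Student B answering a slightly altered question.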

2. Generative Adversarial Networks (GANs)

Generative adversarial networks (GANs) are another class of neural network, made up of two competing submodels that are trained against each other without labelled examples: the generator and the discriminator. The generator creates fake visual or acoustic outputs based on the source data it is trained on. The discriminator judges whether each output is genuine or generated, accepting or rejecting it accordingly.

The cycle continues until the generator produces outputs realistic enough to fool the discriminator. In deepfake pipelines, this adversarial training can be used to correct flaws in autoencoder output, such as blurriness.
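The generator-versus-discriminator cycle can be sketched with a deliberately tiny GAN in plain Python. Everything here is a hypothetical toy: the "real data" are numbers drawn from a normal distribution with mean 3, the generator can only shift random noise by a learnable amount `mu`, and the discriminator is a single logistic unit that outputs the probability that a number is real. The L2 penalty on the discriminator weight is our own addition for extra stability in this toy; it is not part of the basic GAN recipe. Real deepfake GANs use deep networks on images, but the alternating updates follow the same pattern.

```python
import math
import random


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


def train_toy_gan(real_mean=3.0, steps=2000, batch=64, lr=0.05, l2=1.0, seed=0):
    rng = random.Random(seed)
    mu = 0.0         # generator: fake sample = mu + standard normal noise
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c) = P(x is real)
    for _ in range(steps):
        # Discriminator step: gradient ascent on E[log D(real)] + E[log(1 - D(fake))]
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [mu + rng.gauss(0.0, 1.0) for _ in range(batch)]
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (sum((1 - d) * x for d, x in zip(d_real, real))
                  - sum(d * x for d, x in zip(d_fake, fake))) / batch
        grad_c = (sum(1 - d for d in d_real) - sum(d_fake)) / batch
        w += lr * (grad_w - l2 * w)  # L2 penalty: extra damping (our addition)
        c += lr * grad_c
        # Generator step: gradient ascent on E[log D(fake)], i.e. try to fool D
        fake = [mu + rng.gauss(0.0, 1.0) for _ in range(batch)]
        grad_mu = sum((1 - sigmoid(w * x + c)) * w for x in fake) / batch
        mu += lr * grad_mu
    return mu, w, c


mu, w, c = train_toy_gan()
print(f"generator now centres its fakes at {mu:.2f} (real data centre: 3.00)")
```

While the fakes sit away from the real data, the discriminator finds a direction that separates them, and the generator follows that direction until the discriminator can no longer tell the two apart.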

Are Deepfakes Only Videos?

The assumption that deepfakes exist only as videos is understandable, since most cybercrimes involving deepfakes use video.

Deepfakes, however, go beyond video. Audio deepfakes in particular are widely overlooked in surveys and studies of media manipulation.

Audio deepfakes clone a person’s voice to generate synthetic audio. In many cases, the cloned voice is hard to distinguish from the original. Audio deepfakes can be produced through replay attacks, speech synthesis, and voice conversion or manipulation.

Besides audio deepfakes, other, less prominent forms of deepfakes also exist and deserve to be taken seriously: text deepfakes, such as outright fabrications, humorous fakes, and hoaxes; and image deepfakes, such as AI avatars, face swaps, fully synthesised images, and AI-assisted editing (essentially automated Photoshop).

How Are Deepfake Videos Made?

Deepfake videos are made mainly with machine learning. As explained earlier, the creator trains a neural network on many hours of real footage of the person being imitated (the source). Having learned, through autoencoders, GANs, or both, to recognise the source under any lighting and from any angle, the network can then reproduce the source’s face with whatever expression and pose each frame of the target footage calls for. The reproduction is transposed onto the target’s actions, producing a falsified video in which one person appears to say or do things that another person actually said or did.
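The frame-by-frame process above can be sketched as follows, assuming the design commonly described for early open-source face-swap tools: one encoder shared by both people, plus a separate decoder for each person. The shared encoder learns to capture pose, expression, and lighting from either face, while Harry's decoder learns to draw only Harry. Passing a frame of Nick through the shared encoder and then Harry's decoder therefore yields Harry's face wearing Nick's expression. The `encode` and `decode_source` functions and the dictionary frame format are hypothetical stand-ins for trained networks and real video frames, and the face detection, alignment, and blending steps a real tool needs are omitted.

```python
from typing import Any, Callable, Dict, List, Sequence

Frame = Dict[str, Any]  # hypothetical frame format: {"face": ..., "background": ...}


def swap_faces(
    target_frames: Sequence[Frame],
    encode: Callable[[Any], Any],         # shared encoder, trained on both people
    decode_source: Callable[[Any], Any],  # decoder trained only on the source (Harry)
) -> List[Frame]:
    swapped = []
    for frame in target_frames:
        code = encode(frame["face"])        # pose/expression/lighting of Nick's face
        new_face = decode_source(code)      # redrawn with Harry's features
        swapped.append({**frame, "face": new_face})  # Nick's body and scene stay
    return swapped


# Stand-in "networks" for illustration: faces are (identity, expression) pairs.
def toy_encode(face):
    identity, expression = face
    return expression  # keep only the identity-independent details


def toy_decode_harry(code):
    return ("Harry", code)  # always redraws Harry's face


nick_video = [
    {"face": ("Nick", "mouth open"), "background": "stage"},
    {"face": ("Nick", "smiling"), "background": "stage"},
]
for frame in swap_faces(nick_video, toy_encode, toy_decode_harry):
    print(frame)
```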

What Technology Do You Need To Create Deepfakes?

The technology behind deepfakes includes the two kinds of neural networks discussed above: autoencoders and GANs. Their parent technology, neural networks, owes much to the research of Geoffrey Hinton, who made significant contributions to the advancement of AI, while GANs were introduced by Ian Goodfellow and colleagues in 2014. Neural networks were designed to mimic the human brain: rather than simply memorising and repeating results, they learn to make sense of small details, much as the human brain does with fragments of its memories.

How Do You Detect Deepfakes?

Deepfakes are created by tools that aim to perfect the recognition and recreation of faces or voices, using encoder-decoder models such as autoencoders, sometimes combined with generative adversarial networks. These networks require time, thousands of data files, and expert manual input to yield the desired results. The effort invested, the measures taken against detection, and the resulting quality determine how easily a deepfake can be spotted.

There are two prominent means of detecting deepfakes:

- manually studying the suspicious deepfake or

- the use of Deepfake detection software or tools.

Technological progress tends to generate its own checks and balances: many potentially dangerous inventions are eventually met by tools designed to counter them. Since the advent of deepfakes and the discovery of their dangerous capabilities, there have been efforts to develop countermeasures.

Deepfake detection tools are the easiest way to identify deepfakes. Some operate on online platforms, such as the systems Facebook uses to enforce its policy against manipulated media. Others are standalone software, such as Microsoft Video Authenticator, which analyses a video clip and gives a percentage score indicating the likelihood that the clip is a deepfake. Deepware, another tool, works much like Microsoft Video Authenticator. Other automated techniques analyse biological signals, phoneme-viseme mismatches (where mouth shapes do not match the sounds being spoken), or facial movements, or use recurrent convolutional models.
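The phoneme-viseme check mentioned above can be sketched in a few lines. It rests on a simple observation: to say "M", "B", or "P", the lips must close completely, so frames where those sounds are spoken but the mouth is visibly open are suspicious. This is a simplified, hypothetical version: it assumes a speech aligner has already labelled each video frame with the phoneme being spoken and a facial-landmark tracker has measured mouth openness on a 0-to-1 scale, and the 0.2 threshold is an arbitrary illustrative value. The output is a percentage-style score in the spirit of the tools above, not a reproduction of any of them.

```python
from typing import List, Tuple

# Bilabial phonemes: the lips must close completely to produce them.
CLOSED_LIP_PHONEMES = {"M", "B", "P"}


def viseme_mismatch_rate(frames: List[Tuple[str, float]],
                         open_threshold: float = 0.2) -> float:
    """Fraction of M/B/P frames in which the mouth is visibly open.

    frames: one (phoneme, mouth_openness) pair per video frame, where
    mouth_openness runs from 0.0 (closed) to 1.0 (wide open).
    """
    checked = [openness for phoneme, openness in frames
               if phoneme in CLOSED_LIP_PHONEMES]
    if not checked:
        return 0.0  # nothing to check in this clip
    mismatches = sum(1 for openness in checked if openness > open_threshold)
    return mismatches / len(checked)


# Hypothetical per-frame data for a short clip
clip = [("M", 0.05), ("AA", 0.70), ("B", 0.60), ("P", 0.10), ("OW", 0.50)]
print(f"mismatch score: {viseme_mismatch_rate(clip):.0%}")
```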

Detecting deepfakes manually is far more demanding. It requires great care and sometimes luck. It involves looking for incongruities such as unnatural eye and body movements, unnatural facial expressions, a lack of emotion, unrealistic hair and teeth, blurred areas, misalignments, and inconsistent audio. In addition to these checks, one can run reverse image searches to find the original footage a deepfake may have been built from.
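Some of these manual checks can be partly automated. As one hypothetical example, the sketch below counts blinks in a per-frame eye-openness signal (assumed to come from a facial-landmark tracker, which is not shown) and converts the count into blinks per minute. An unusually low or irregular rate for the person in question is one kind of unnatural eye movement worth a closer look; the 0.2 threshold is an illustrative assumption, and no rate on its own proves that a video is fake.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_threshold: float = 0.2) -> int:
    """Count close-then-reopen events in a per-frame eye-openness signal.

    A blink still in progress when the clip ends is not counted.
    """
    blinks = 0
    closed = False
    for value in eye_openness:
        if value < closed_threshold:
            closed = True
        elif closed:  # the eye has reopened after being closed
            blinks += 1
            closed = False
    return blinks


def blinks_per_minute(eye_openness: Sequence[float], fps: float,
                      closed_threshold: float = 0.2) -> float:
    if not eye_openness or fps <= 0:
        raise ValueError("need a non-empty signal and a positive frame rate")
    minutes = len(eye_openness) / fps / 60
    return count_blinks(eye_openness, closed_threshold) / minutes


# Hypothetical one-second clip at 30 fps containing two blinks
signal = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.05] * 2 + [0.3] * 5
print(count_blinks(signal), blinks_per_minute(signal, fps=30))
```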

How Dangerous Are Deepfakes?

Deepfakes do have merits: in the arts, for filmmaking; in research fields such as medicine, where generative AI can create synthetic brain scans modelled on real patients; and in identity protection, for example by disguising interviewees.

The dangers of deepfakes, however, are considerable. Below we discuss some of them and their social, political, and economic impacts:

1. Revenge Porn

No list of the ills deepfakes bring would be complete without their pornographic applications. As mentioned earlier in this post, the very name “deepfakes” springs from the malicious creation of pornographic content. To put this in perspective, research has found that 96% of deepfake videos online were pornographic, content that can tarnish the reputations of the people depicted. Female celebrities in particular risk severe reputational damage if this is not controlled.

2. Phishing and Scamming

People can use deepfake technology to create fake videos or recordings of people with financial authority, such as executives, and use them to trick others into handing over money. In 2020, Forrester predicted that deepfake scams would cost $250 million.

3. Legal Evidence Compromise

Another danger of deepfake technology lies in the legal system, where it can be used to fabricate, tamper with, or discredit evidence. For example, during a child custody battle in a British court between 2019 and 2020, a woman presented doctored recordings of her husband being abusive. Had the husband not been able to prove the recordings were fake, justice would probably have miscarried.

4. Medical Infrastructural Compromise

The positives of deepfakes can also double as drawbacks. Earlier, we mentioned that deepfakes could be used to create synthetic brain scans, for instance to help study a patient’s tumour. Extended further, the same technique could let people with malicious intent deceive medical facilities with false radiographic information about a targeted patient.

5. Political Propaganda

Political opponents can use deepfakes to influence the public and sow fear and distrust.

Real recordings have already shown how much damage a single clip can do. In 2008, Barack Obama was recorded telling a small gathering that residents of hard-hit regions often cling to guns or religion; in 2012, Mitt Romney was recorded saying that 47% of the nation’s population were content to depend on the government; and in 2016, Hillary Clinton said at a fundraiser that half of Donald Trump’s supporters belonged in a “basket of deplorables”. All three recordings were genuine, and each became a major campaign controversy. Deepfakes make it possible to fabricate equally damaging clips, and to dismiss genuine ones as fakes.

The political intricacies and consequences of deepfakes, if not efficiently managed, are capable of leaving a dent in global democracy.

Is Deepfake Technology Legal?

The legality of deepfakes depends on factors designed to protect potential victims. In December 2019, the World Intellectual Property Organization (WIPO) published its Draft Issues Paper on Intellectual Property Policy and Artificial Intelligence. As it stands, deepfakes have not been outlawed outright in nearly any country. China is one of the very few countries taking active steps to restrict them.

According to WIPO, if the content of a deepfake completely contradicts the life of the person depicted, it cannot be granted copyright protection. In the same draft, WIPO states that deepfake content that is irrelevant, inaccurate, or false should be erased promptly.

Deepfakes aren’t exactly illegal, but caveats around their usage, publication, and creation are in place.

Wrapping it Up

While deepfakes have harmless applications, the effects of their malicious use outweigh their merits, raising concerns about cybersecurity, the veracity of digital content, global democracy, and individual privacy.

According to Deeptrace, about 15,000 deepfake videos were found online in 2019, double the number found nine months earlier. Furthermore, 96% of these videos were pornographic, and 99% of those pornographic videos targeted women.
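To put the Deeptrace figures in perspective, the arithmetic below works out what a doubling over nine months implies. It assumes, purely for illustration, that growth was steady and exponential, which the figures alone do not establish, so the results give a rough sense of scale rather than a forecast.

```python
videos_2019 = 15_000  # deepfake videos found online in 2019 (Deeptrace, as cited above)
doubling_months = 9   # the count had doubled over roughly nine months

earlier_count = videos_2019 / 2
monthly_factor = 2 ** (1 / doubling_months)  # growth per month, if steady
annual_factor = 2 ** (12 / doubling_months)  # growth per year, if steady

print(f"count nine months earlier: about {earlier_count:,.0f}")
print(f"implied monthly growth:    about {monthly_factor - 1:.0%}")
print(f"implied annual growth:     x{annual_factor:.2f}")
```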

These statistics point to rapid growth in malicious deepfakes. If governments worldwide do not actively address these concerns, who knows how deep into fakery the digital future will sink?